College Freshmen are More Godless Than Ever

In the last 30 years, college students have become much less religious. The fraction who say they have no religious affiliation has more than tripled, from about 10% to 34%. And the fraction who say they have attended a religious service in the last year fell from more than 85% to 66%.

I’ve been following this trend for a while, using data from the CIRP Freshman Survey, which has surveyed a large sample of entering college students since 1966.

The most recently published data is from “95,505 first-time, full-time freshmen entering 148 baccalaureate institutions” in Fall 2019.

Of course, college students are not a representative sample of the U.S. population. Furthermore, as rates of college attendance have increased, entering students have come to represent a different slice of the population than they did in earlier decades. Nevertheless, surveying young adults over a long interval provides an early view of trends in the general population.

Religious Preference

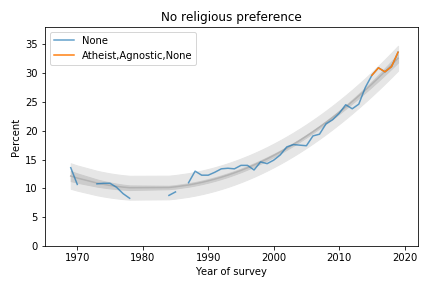

Among other questions, the Freshman Survey asks students to select their “current religious preference” from a list of seventeen common religions, “Other religion,” “Atheist”, “Agnostic”, or “None.”

The options “Atheist” and “Agnostic” were added in 2015. For consistency over time, I compare the “Nones” from previous years with the sum of “None”, “Atheist” and “Agnostic” since 2015.
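The pooling rule above can be sketched in a few lines of Python. This is a minimal illustration, not the code used for the figures: the function name `fraction_nones` and the dictionary layout (response label mapped to percentage) are assumptions made for the example.

```python
def fraction_nones(row):
    """Return the combined percentage of unaffiliated students for one year.

    `row` is a hypothetical dict mapping response labels to percentages.
    Years before 2015 contain only "None"; later years also contain
    "Atheist" and "Agnostic", which are added in so the series stays
    comparable over time.
    """
    labels = ("None", "Atheist", "Agnostic")
    # Labels missing from a given year contribute zero.
    return sum(row.get(label, 0.0) for label in labels)
```

Because missing labels count as zero, the same function works for years before and after the new options were added.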

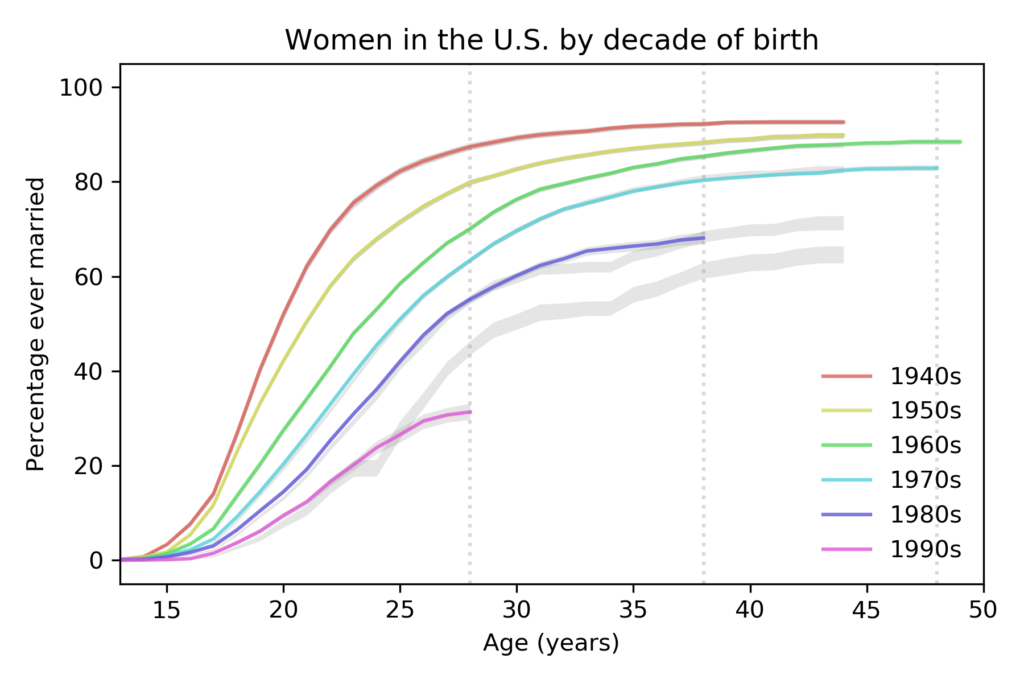

The following figure shows the fraction of Nones from 1969, when the question was added, to 2019, the most recent data available.

The blue line shows data until 2015; the orange line shows data from 2015 through 2019. The gray line shows a quadratic fit. The light gray region shows a 95% predictive interval.

The quadratic model continues to fit the data well, and the most recent data point is above the trend line, which suggests that the “rise of the Nones” is still accelerating.
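For readers who want to try a fit like this, here is a dependency-free sketch of an ordinary least-squares quadratic. It is an assumption-laden illustration: the post does not say how the fit in the figure was computed, the names `fit_quadratic` and `predict` are made up for this example, and the sketch does not compute the predictive interval.

```python
def fit_quadratic(xs, ys):
    """Least-squares fit of y = a + b*t + c*t**2, where t = x - mean(xs).

    Returns (a, b, c, x_mean). Centering the x values keeps the normal
    equations well conditioned when xs are calendar years.
    Needs at least three distinct x values.
    """
    n = len(xs)
    x_mean = sum(xs) / n
    ts = [x - x_mean for x in xs]
    # Power sums S[k] = sum(t**k) and moment sums T[k] = sum(y * t**k).
    S = [sum(t**k for t in ts) for k in range(5)]
    T = [sum(y * t**k for t, y in zip(ts, ys)) for k in range(3)]
    # Normal equations as an augmented 3x4 matrix.
    M = [[S[i + j] for j in range(3)] + [T[i]] for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(3):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [v - factor * p for v, p in zip(M[r], M[col])]
    a, b, c = (M[i][3] / M[i][i] for i in range(3))
    return a, b, c, x_mean


def predict(coefs, x):
    """Evaluate the fitted quadratic at x (same units as the xs used to fit)."""
    a, b, c, x_mean = coefs
    t = x - x_mean
    return a + b * t + c * t**2
```

With a fit in hand, a positive `c` means the curve bends upward, and comparing the latest observation with `predict(coefs, latest_year)` shows whether that point lies above or below the trend line.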

Attendance

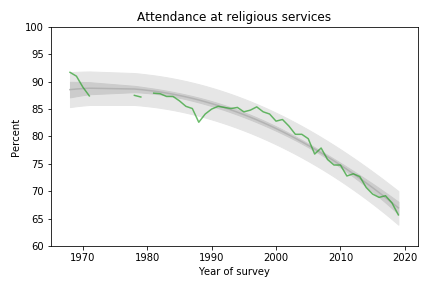

The survey also asks students how often they “attended a religious service” in the last year. The choices are “Frequently,” “Occasionally,” and “Not at all.” Respondents are instructed to select “Occasionally” if they attended one or more times, so a wedding or a funeral would do it.

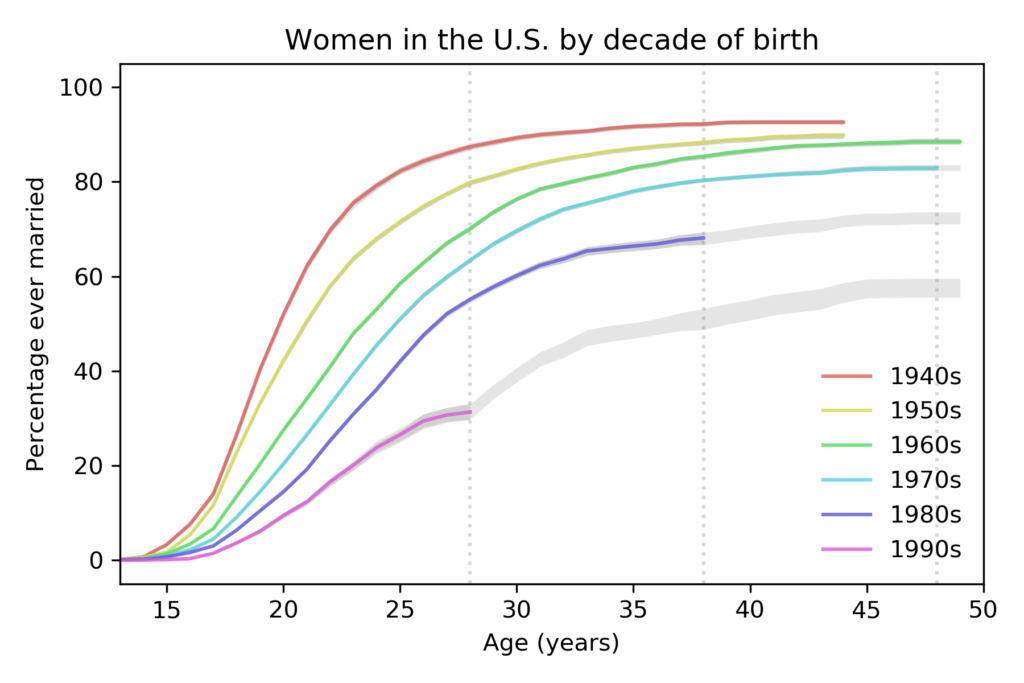

The following figure shows the fraction of students who reported any religious attendance in the last year, starting in 1968. I discarded a data point from 1966 that seems unlikely to be correct.

In Fall 2019, about 66% of incoming college students said they attended a religious service in the last year, an all-time low in the history of the survey, and down more than 20 percentage points from the peak.

This curve is on trend, with no sign of slowing down.

Gender Gap

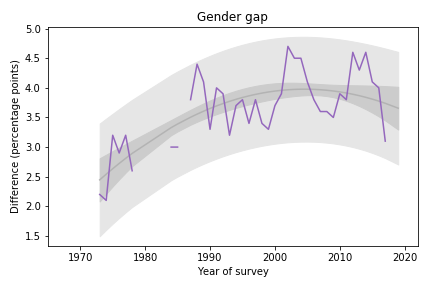

Female students are more religious than male students. The following graph shows the gender gap over time, that is, the difference in percentages of male and female students with no religious affiliation.

The gender gap was growing until recently. It has shrunk in the last 3-4 years, but since it varies substantially from year to year, it is hard to rule out random variation.
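As a small sketch of how a gap series like this could be computed, assume two hypothetical dicts that map survey year to the percentage of Nones among men and among women. The function name and the sign convention (men minus women, so a positive gap means men are less affiliated) are assumptions for illustration; the post does not state which direction the figure uses.

```python
def gender_gap(pct_men, pct_women):
    """Return {year: men - women} in percentage points.

    Both arguments are hypothetical dicts mapping year to the percentage
    of students with no religious affiliation. Years missing from either
    input are skipped rather than guessed.
    """
    years = sorted(set(pct_men) & set(pct_women))
    return {year: pct_men[year] - pct_women[year] for year in years}
```

Since the gap is a difference of two noisy percentages, its year-to-year variation can be larger than that of either series alone, which is one reason a few years of decline are hard to distinguish from chance.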

Data Source

The American Freshman: National Norms Fall 2019, Stolzenberg, Aragon, Romo, Couch, McLennon, Eagan, and Kang, Higher Education Research Institute, UCLA, June 2020.

This and all previous reports are available from the HERI publications page.