Political alignment and beliefs about homosexuality

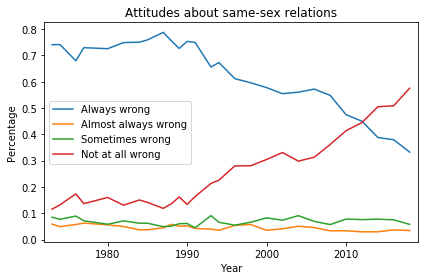

In the United States, beliefs and attitudes about homosexuality have changed drastically over the last 50 years. In 1972, 74% of U.S. residents thought sexual relations between two adults of the same sex were “always wrong”, according to results from the General Social Survey (GSS). In 2018, that fraction was down to 33%, and another 58% thought same-sex relations were “not wrong at all”.

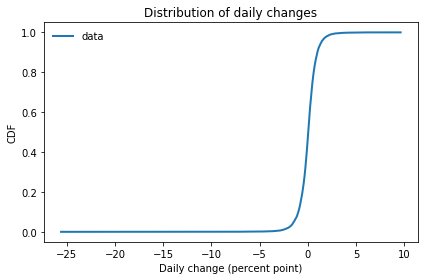

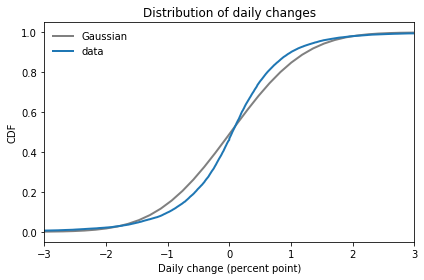

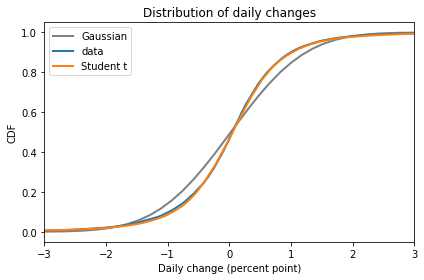

Here’s what the distribution of responses looks like over the duration of the survey:

In the late 1980s, the fraction of “always wrong” responses began to fall, and those responses were replaced almost entirely by “not wrong at all”. Respondents who chose “almost always wrong” or “sometimes wrong” have always been a small minority.
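A distribution like this comes from counting, for each year, how many respondents chose each answer and dividing by that year's total. Here is a minimal Python sketch of that tabulation. It assumes the data arrive as hypothetical `(year, code)` pairs, with codes 1 to 4 standing for the four answers in the order above; this coding is an assumption for illustration. The sketch is unweighted, and because the GSS publishes sampling weights, a real analysis would probably need to apply them.

```python
from collections import Counter, defaultdict

# Assumed coding for illustration: 1-4 are the four substantive answers.
LABELS = {
    1: "always wrong",
    2: "almost always wrong",
    3: "sometimes wrong",
    4: "not wrong at all",
}


def response_distribution(records):
    """Return {year: {label: fraction}} from (year, code) pairs.

    Codes not in LABELS (missing, "other", etc.) are dropped, so each
    year's fractions sum to 1. No survey weights are applied.
    """
    counts = defaultdict(Counter)
    for year, code in records:
        if code in LABELS:
            counts[year][LABELS[code]] += 1

    result = {}
    for year in sorted(counts):
        total = sum(counts[year].values())
        # Include every label, so answers nobody chose show up as 0.0.
        result[year] = {label: counts[year][label] / total for label in LABELS.values()}
    return result


# Made-up records, not GSS data.
records = [(1972, 1), (1972, 1), (1972, 1), (1972, 4), (2018, 1), (2018, 4), (2018, 4), (2018, 3)]
for year, shares in response_distribution(records).items():
    print(year, shares)
```

Keeping every label in each year's result, even with a zero share, makes it straightforward to draw one line per answer across all years.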

Political alignment

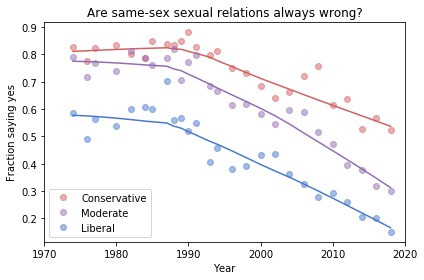

As you might expect, these responses are related to political alignment, that is, to whether respondents describe themselves as liberal, conservative, or moderate.
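Comparing the groups means collapsing each respondent's self-description into one of three groups and then computing the fraction of “always wrong” answers within each group and year. The sketch below assumes a 7-point alignment scale (1 = extremely liberal, 4 = moderate, 7 = extremely conservative) and the same 1 to 4 answer coding as before. The cut points that define the three groups, and the `(year, alignment, answer)` record layout, are hypothetical choices made for illustration.

```python
from collections import defaultdict


def alignment(polviews):
    """Collapse an assumed 7-point scale into three groups.

    Assumed coding: 1-3 liberal, 4 moderate, 5-7 conservative.
    Any other value is treated as missing and returns None.
    """
    if polviews in (1, 2, 3):
        return "liberal"
    if polviews == 4:
        return "moderate"
    if polviews in (5, 6, 7):
        return "conservative"
    return None


def fraction_always_wrong(records, always_wrong_code=1, valid_codes=(1, 2, 3, 4)):
    """Map (group, year) -> fraction of valid answers that are "always wrong".

    records: iterable of (year, polviews, answer) tuples (hypothetical layout).
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for year, polviews, answer in records:
        group = alignment(polviews)
        if group is None or answer not in valid_codes:
            continue  # skip missing alignment or non-substantive answers
        key = (group, year)
        totals[key] += 1
        if answer == always_wrong_code:
            hits[key] += 1
    return {key: hits[key] / totals[key] for key in totals}


# Made-up records, not GSS data.
sample = [(1972, 2, 1), (1972, 2, 4), (1972, 6, 1), (1972, 6, 1), (2018, 6, 4)]
print(fraction_always_wrong(sample))
```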

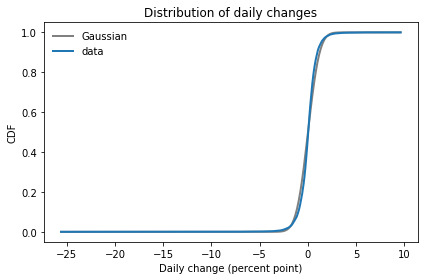

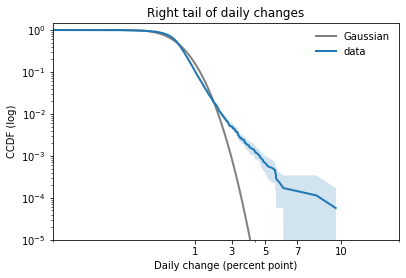

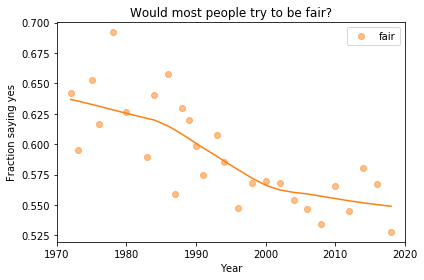

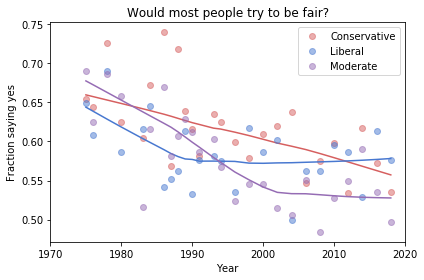

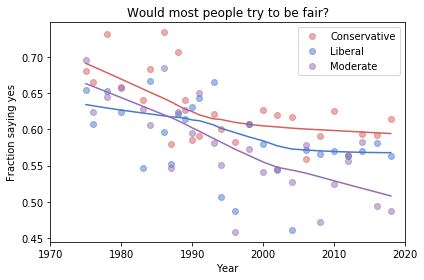

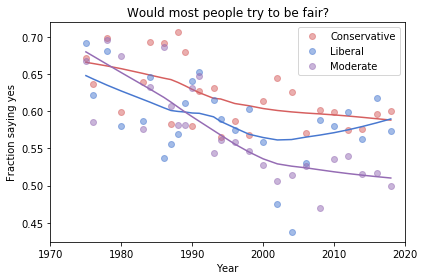

The following figure shows the fraction of “always wrong” responses over time, grouped by political alignment:

The circles in this figure show the observed percentage in each group for each year. The lines show smooth curves computed by local regression.
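Local regression fits a separate small model around each point, giving the most weight to nearby observations. Below is a simplified sketch of the idea: at each x it fits a weighted straight line to the nearest `frac` share of the points, using tricube weights. It is plain LOWESS without the robustness iterations. It is not necessarily the smoother behind this figure; library versions such as the `lowess` function in statsmodels also reweight outliers. The function name and the default `frac` value are illustrative choices.

```python
import math


def local_linear_smooth(xs, ys, frac=0.5):
    """Smooth ys against xs with tricube-weighted local linear fits.

    For each x0, the bandwidth h is the distance to the k-th nearest x
    (k = ceil(frac * n), at least 3). Points are weighted by
    (1 - (d / h)^3)^3, and a weighted least-squares line is evaluated
    at x0. There are no robustness iterations.
    """
    n = len(xs)
    k = min(n, max(3, math.ceil(frac * n)))
    fitted = []
    for x0 in xs:
        h = sorted(abs(x - x0) for x in xs)[k - 1]
        if h == 0:
            h = 1.0  # guard: many tied x values would otherwise give zero bandwidth
        weights = [(1 - min(abs(x - x0) / h, 1.0) ** 3) ** 3 for x in xs]
        sw = sum(weights)  # at least 1, because x0 itself has weight 1
        mx = sum(w * x for w, x in zip(weights, xs)) / sw
        my = sum(w * y for w, y in zip(weights, ys)) / sw
        sxx = sum(w * (x - mx) ** 2 for w, x in zip(weights, xs))
        sxy = sum(w * (x - mx) * (y - my) for w, x, y in zip(weights, xs, ys))
        slope = sxy / sxx if sxx > 1e-12 else 0.0  # fall back to a weighted mean
        fitted.append(my + slope * (x0 - mx))
    return fitted


# Illustrative use: years on the x-axis, observed percentages on the y-axis.
years = [1972, 1976, 1980, 1984, 1988, 1992, 1996]
percents = [60.0, 58.0, 61.0, 57.0, 55.0, 50.0, 46.0]
print(local_linear_smooth(years, percents, frac=0.6))
```

Because each local fit is a line rather than a constant, data that already lie on a straight line come back unchanged. Only curvature and noise get smoothed.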

Unsurprisingly, people who consider themselves conservative are consistently more likely than liberals to say homosexuality is always wrong, and moderates fall between the two groups.

What might be more surprising is how conservative self-described liberals were in 1972: almost 60% of them thought homosexuality was always wrong.

You might also be surprised at how liberal self-described conservatives are now: the fraction who think homosexuality is always wrong is down to 60%. In other words, on this question, conservatives now are about as liberal as liberals were in 1972.

The more things change…

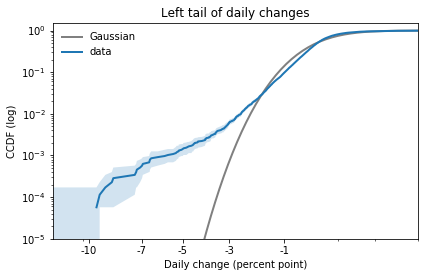

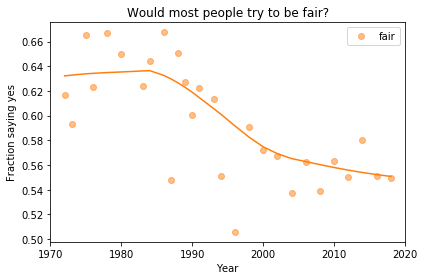

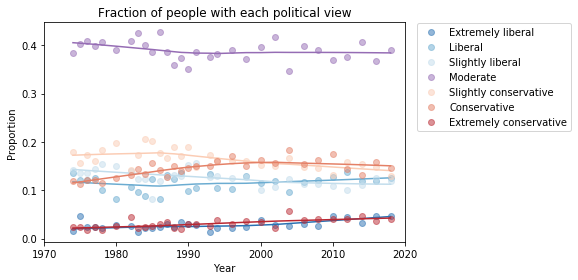

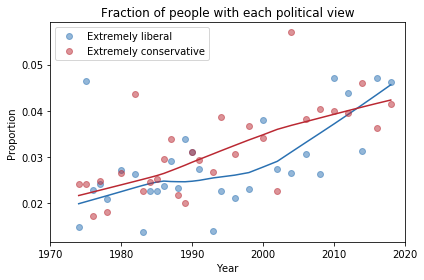

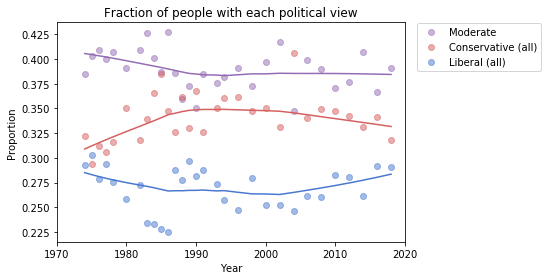

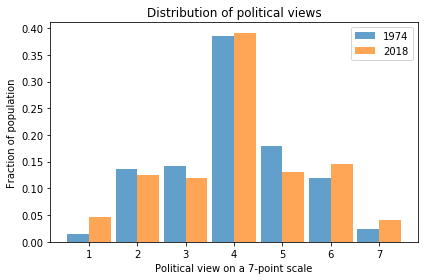

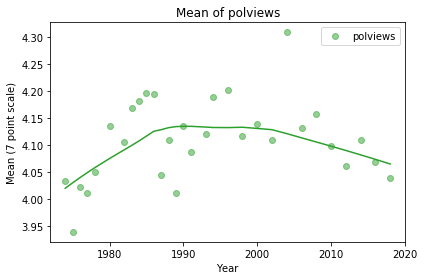

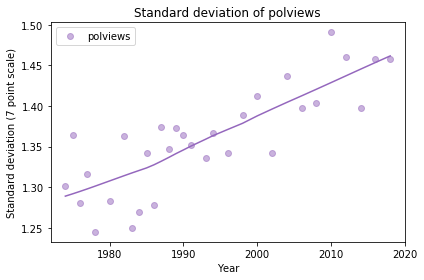

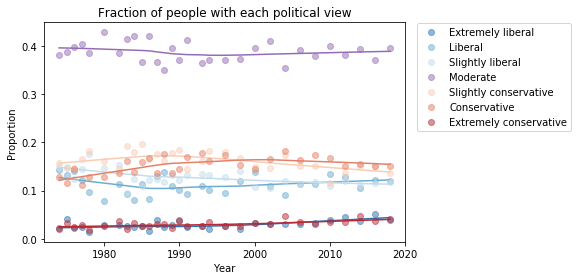

As we saw in a previous article, the fractions of liberals and conservatives do not change much over time. The following figure shows the proportions for GSS respondents:

I conjecture that people describe themselves relative to a perceived center of mass of public opinion. If they are more conservative than what they think is the mean, they are more likely to say they are “conservative”.

But what that means, in terms of beliefs and attitudes, changes over time. And with some issues, it changes quite fast.
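A toy model helps make the conjecture concrete. In the hypothetical sketch below, each person has an attitude score (higher means more conservative) and labels themselves relative to the population mean, with a fixed band around the mean counted as “moderate”. For simplicity, the perceived center is assumed to equal the actual mean. If everyone's attitude shifts by the same amount, every label stays the same, so the shares of liberals, moderates, and conservatives do not change, even though the average attitude of self-described conservatives moves. The scores, the band width, and the function names are all illustrative assumptions, not GSS measurements.

```python
from statistics import mean

GROUPS = ("liberal", "moderate", "conservative")


def self_labels(attitudes, band=1.5):
    """Label each attitude relative to the (assumed) perceived center.

    Hypothetical model: higher score = more conservative. People within
    `band` of the mean call themselves moderate; the rest pick a side.
    """
    center = mean(attitudes)
    labels = []
    for a in attitudes:
        if a > center + band:
            labels.append("conservative")
        elif a < center - band:
            labels.append("liberal")
        else:
            labels.append("moderate")
    return labels


def summarize(attitudes, band=1.5):
    """Return (share of each label, mean attitude of self-described conservatives)."""
    labels = self_labels(attitudes, band)
    shares = {g: labels.count(g) / len(labels) for g in GROUPS}
    conservative_mean = mean(a for a, g in zip(attitudes, labels) if g == "conservative")
    return shares, conservative_mean


then = list(range(9))            # made-up attitude scores 0..8
now = [a - 3 for a in then]      # everyone shifts 3 points toward liberal
print(summarize(then))
print(summarize(now))
```

Under these assumptions the printed shares are identical in both cases, while the conservatives' mean attitude drops from 7 to 4. This is the pattern described above: stable labels with shifting content.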

The data and code I used for this article are in this GitHub repository. If you would like to run the same analysis with other variables in the GSS, you can run this Jupyter notebook.