Stop worrying and love the black box

In many engineering classes, computational methods are treated with fear, uncertainty, and doubt. At the same time, analytic methods are presented as if they were magic.

Instructors warn me that students have to know how these methods work in order to use them correctly; otherwise they are likely to produce nonsense results and accept them blindly.

And if they let students use computational tools at all, the order of presentation is usually “bottom-up”, that is, a lot of “how it works” before “what it does”, and not much “why you should care”.

In my books and classes, we often go “top-down”, learning to use tools first, and opening the hood only when it’s useful. It’s like learning to drive; knowing about internal combustion engines does not make you a better driver.

But a lot of people don’t like that analogy. Recently one of the good people I follow on Twitter wrote, “No, doing fancy analyses without understanding the basic statistical principles isn’t like driving a car without knowing the mechanics. It’s like driving a car while heavily intoxicated, being in all kinds of accidents without knowing it.”

I replied, “I don’t think there is a general principle here. Sometimes you can use black boxes safely. Sometimes you have to know how they work. Sometimes knowing how they work doesn’t actually help.”

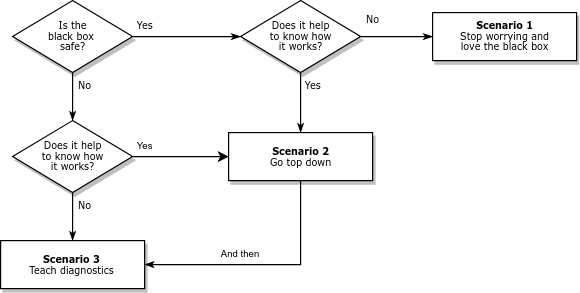

So how do we know which scenario we’re in, and what should we do about it? I suggest the following flow chart:

Many black boxes can be used safely; that is, they produce accurate results over the range of relevant problems. In that case, we should ask whether it (really) helps to know how they work. In Scenario 1, the answer is no; we can stop worrying, stop teaching how it works, and use the time we save to teach more useful things.

Of course, some black boxes have sharp edges. They work when they work, but when they don’t, bad things happen. In that case, we should still ask whether it helps to know how they work. In Scenario 3, the answer is no again. In that case, we have to teach diagnosis: What happens when the black box fails? How can we tell? What can we do about it? Often we can answer these questions without knowing much about how the method works.

But sometimes we can’t, and students really need to open the hood. In that case (Scenario 2 in the diagram) I recommend going top-down. Show students methods that solve problems they care about. Start with examples where the methods work, then introduce examples where they break. If the examples are authentic, they motivate students to understand the problems and how to fix them.
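For readers who think in code, the three scenarios can be sketched as a tiny decision function. This is only an illustration: the function name `scenario` and its two boolean arguments are hypothetical, and the text does not say where a safe black box lands when knowing how it works does help, so sending that case to Scenario 2 is an assumption.

```python
def scenario(safe: bool, helps_to_know: bool) -> int:
    """Return the scenario number (1, 2, or 3) for a black-box method.

    safe: the method gives accurate results over the range of relevant problems.
    helps_to_know: knowing how the method works actually helps the user.
    """
    if helps_to_know:
        # Scenario 2: open the hood, going top-down.
        # The text describes this for boxes with sharp edges; treating a
        # safe box the same way is an assumption of this sketch.
        return 2
    if safe:
        # Scenario 1: stop worrying and spend the time on other topics.
        return 1
    # Scenario 3: teach diagnosis (how to detect and handle failures).
    return 3


# A safe method whose internals don't help the user falls in Scenario 1.
print(scenario(safe=True, helps_to_know=False))
```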

With this framework, I can explain more concisely my misgivings about how computational methods are taught:

The engineering curriculum is designed on the assumption that we are always in Scenario 2, but Scenarios 1 and 3 are actually more common.